Software localization includes steps such as:

- Preparing the code for translation

- Exporting the software strings

- Fine-tuning parsing and segmentation strategies

- Developing a key terminology list (glossary)

- Creating the software translation project

- Assigning translators

- Assigning to reviewers

- Query management

- Automated QA checks

- Re-importing translated strings

- QA checks either in the staging environment or of screenshots

- Testing

In this article we will explore these different components and their implications in the overall localization process.

Preparing the code for translation

While some software is coded with translation in mind, translation is often a requirement that pops up when the software is already mature. To prepare the code for translation, close attention needs to be paid to variables, dates, and the overall separation of translatable content from code. Dates, currency, and other elements change format according to region. If they are hard-coded, they will need to be re-coded as dynamic entities to allow for intercultural flexibility. Depending on how the code is written, it may be very easy or very hard to isolate code from translatable text. This will affect the next step, exporting the strings, and it is perhaps the most overlooked and underestimated aspect of software localization. Every ripple in the separation of code and text turns into waves that affect the localization process as a whole. It may result in scenarios where it’s challenging either to preserve the integrity of the code or to make it friendly for translators. Sure, you can overcome some of these challenges through parsing and segmentation strategies, but the more cleanly you can export translatable content without code interfering, the better (more sustainable and scalable) your software localization process will be.
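
To make the idea of dynamic entities concrete, here is a minimal sketch in Python. It assumes a hand-written table of date patterns and a small message table; the names `DATE_PATTERNS`, `MESSAGES`, `format_date`, and `render` are illustrative and not part of any particular framework. A real project would more likely take its formats from a locale database than maintain them by hand.

```python
from datetime import date

# Hypothetical per-locale date patterns, kept outside the program logic.
DATE_PATTERNS = {
    "en-US": "{m:02d}/{d:02d}/{y}",
    "de-DE": "{d:02d}.{m:02d}.{y}",
    "ja-JP": "{y}/{m:02d}/{d:02d}",
}

# Translatable text lives in a resource table, addressed by key.
MESSAGES = {
    "en-US": {"invoice.due": "Payment is due on {due_date}."},
    "de-DE": {"invoice.due": "Die Zahlung ist am {due_date} fällig."},
}


def format_date(value: date, locale: str) -> str:
    """Format a date with the pattern registered for the locale."""
    return DATE_PATTERNS[locale].format(y=value.year, m=value.month, d=value.day)


def render(key: str, locale: str, **variables: str) -> str:
    """Look up a message by key and fill in its variables."""
    return MESSAGES[locale][key].format(**variables)


due = date(2024, 3, 5)
english = render("invoice.due", "en-US", due_date=format_date(due, "en-US"))
german = render("invoice.due", "de-DE", due_date=format_date(due, "de-DE"))
# english == "Payment is due on 03/05/2024."
# german  == "Die Zahlung ist am 05.03.2024 fällig."
```

Because neither the sentence nor the date format is hard-coded, adding a language means adding table entries rather than touching the logic.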

Exporting the software strings

Exporting the strings should be a straightforward process. But it’s not. Certain formats are go-to while others should be avoided. Examples of go-to formats: XML, YAML, JSON. They are structured, predictable, and easy to parse, and patterns within the code are relatively easy to find, which cuts down on issues when translating into different languages. Examples of formats to be avoided: TXT, CSV, mixed code. A CSV export, for instance, can become a nightmare. Delimiting characters such as semicolons are also needed as punctuation in the text itself. It’s virtually impossible to distinguish algorithmically between a semicolon that is meant as a code break and a semicolon that is meant as punctuation. This creates hundreds of false positives that need to be checked during the Quality Assurance process, as well as issues during the re-import of code. By mixed code we mean, for example, XML with JSON embedded in it, which adds to the complexity of the parsing and segmentation process. Encoding is also a key pain point:

- HTML Encoding

- URL Encoding

- Unicode Encoding

- Base64 Encoding

- Hex Encoding

- ASCII Encoding

Each of these encodings has ramifications for the localization process, including character incompatibilities depending on the languages involved.
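
Two of the export pitfalls described in this section can be reproduced with the Python standard library. The sketch below uses a made-up string key (`error.save`) and made-up sample text; it first shows a naive semicolon split breaking a sentence, then shows the same visible text arriving under three of the encodings listed above.

```python
import base64
import html
import json
from urllib.parse import unquote

# A made-up exported row in the form key;text, where the text itself
# contains a semicolon used as punctuation.
row = "error.save;Could not save; please try again."

# A naive split cannot tell the delimiter from the punctuation.
naive_fields = row.split(";")  # three fields instead of two

# The same string exported as JSON keeps its boundaries.
exported = json.dumps({"error.save": "Could not save; please try again."})
restored = json.loads(exported)

# One visible text, three encodings. Each must be decoded before
# translation and encoded again the same way on re-import.
html_encoded = "Caf&eacute; &amp; Bar"
url_encoded = "Caf%C3%A9%20%26%20Bar"
base64_encoded = base64.b64encode("Café & Bar".encode("utf-8")).decode("ascii")

decoded = {
    "html": html.unescape(html_encoded),
    "url": unquote(url_encoded),
    "base64": base64.b64decode(base64_encoded).decode("utf-8"),
}
```

A CSV writer that quotes its fields avoids this particular split, but only if every tool in the chain honors the quoting, which is part of why structured formats are the safer default.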

Fine-tuning parsing and segmentation strategies

If you’ve followed the best practices laid out above, your parsing and segmentation strategies will be process optimizers. If you haven’t, parsing and segmentation will become process enablers. As process optimizers, a fine-tuned parsing and segmentation strategy will ensure content is ingested by your translation management system in a way that is friendly for translators and reviewers. This is where you can ensure that variables are protected, any remaining code gets protected, and the text gets broken up in ways that make sense for the translation process. If you have not done your homework, this is the step where things can get crazy, either because it’s just impossible to create enough parsing rules to protect the code and variables or because it will take an insane level of effort to write enough regular expressions to make the software content more translation friendly. Either way, this is a pivotal step. If you change your parsing and segmentation strategy over time, you will experience a loss in translation memory leveraging, which will create extra costs and process complexity. It may not seem like a big deal until it blows up in your face. Let’s assume, for instance, that your entire software has 100,000 words, that you are translating into 10 languages, and that your average cost per word is $0.15, for a total of $150,000. Now assume that after translating your software you iterate on your parsing strategy, and that this causes a 10% loss of leveraging (which can be an expected result of a minor change in parsing). That’s $15,000 lost right out of the gate, not to mention the extra time needed and other ramifications.
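
As a rough illustration of what protecting variables with regular expressions can look like, the sketch below masks placeholders with numbered tokens before translation and restores them afterwards. The placeholder syntaxes it recognizes (`{name}`, `%s`, `%d`, and simple tags) are assumptions, and `protect`, `restore`, and `leverage_loss_cost` are hypothetical helper names. The last function simply reproduces the cost arithmetic from the paragraph above.

```python
import re
from decimal import Decimal

# Assumed placeholder syntaxes: {name}, %s / %d, and simple XML-like tags.
PLACEHOLDER = re.compile(r"\{[A-Za-z_][A-Za-z0-9_]*\}|%[sd]|</?[A-Za-z][^>]*>")


def protect(text: str) -> tuple[str, list[str]]:
    """Replace each placeholder with a numbered token."""
    found: list[str] = []

    def mask(match: re.Match) -> str:
        found.append(match.group(0))
        return f"[[{len(found)}]]"

    return PLACEHOLDER.sub(mask, text), found


def restore(text: str, found: list[str]) -> str:
    """Put the original placeholders back after translation."""
    return re.sub(r"\[\[(\d+)\]\]", lambda m: found[int(m.group(1)) - 1], text)


def leverage_loss_cost(words: int, languages: int,
                       rate: Decimal, loss: Decimal) -> Decimal:
    """Cost of retranslating the share of words that no longer match."""
    return words * languages * rate * loss


original = "Hello {user}, you have %d new <b>messages</b>."
masked, found = protect(original)
# masked == "Hello [[1]], you have [[2]] new [[3]]messages[[4]]."

cost = leverage_loss_cost(100_000, 10, Decimal("0.15"), Decimal("0.10"))
# cost == Decimal("15000"), the $15,000 from the example above
```

Note that changing the `PLACEHOLDER` pattern later changes what the masked segments look like, which is exactly the kind of change that erodes translation memory matches.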

Developing a key terminology list (glossary)

Anyone can develop a glossary. Very few can develop a great one. Some people go the statistical route and mine a content database for the most frequently recurring keywords. While that expedites things and captures terms that are important based on frequency, some key terms are not used that frequently. Some people take the qualitative approach and have translators go through troves of content and manually identify relevant terms. Others use AI to look for linguistic patterns and identify entities and terms based not only on frequency but on their overall semantic relevance. Regardless of the approach, when it comes to a glossary, less is more. While you want to make sure that you have captured the essential terms, if you flag too many terms as glossary terms, it is nearly impossible to ensure governance over proper application. Too many false positives and alerts make it challenging for translators and reviewers to manage the term suggestions and to run automated QA checkers. Another tip with glossaries is that it’s as important to flag terms that should not be translated as those that do require a specific kind of translation.
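
The limits of the statistical route are easy to see in a small sketch. The sample strings, the stopword list, and the product name "Acme Sync" below are all made up for illustration, and `GlossaryEntry` is a hypothetical structure rather than the format of any specific tool.

```python
import re
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class GlossaryEntry:
    source: str
    target: Optional[str]           # None when the term stays untranslated
    do_not_translate: bool = False


STOPWORDS = {"the", "a", "to", "and", "of", "your", "in", "is"}


def frequent_terms(strings: list[str], top: int) -> list[str]:
    """Statistical route: the most frequent non-stopword tokens."""
    words = (w for s in strings for w in re.findall(r"[a-z]+", s.lower()))
    counts = Counter(w for w in words if w not in STOPWORDS)
    return [term for term, _ in counts.most_common(top)]


STRINGS = [
    "Open the workspace",
    "Share your workspace",
    "Delete workspace",
    "Open Acme Sync settings",
]

candidates = frequent_terms(STRINGS, 2)  # ["workspace", "open"]

# "Acme Sync" appears only once, so frequency alone never surfaces it,
# yet it is the term that most needs a do-not-translate flag.
GLOSSARY = [
    GlossaryEntry("workspace", "espacio de trabajo"),
    GlossaryEntry("Acme Sync", None, do_not_translate=True),
]
```

Keeping the do-not-translate flag on the entry itself lets the same glossary drive both term suggestions and automated checks.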

Creating the software translation project

This step is highly contingent on the framework of the translation management system you are using. Some systems will allow you to manage the entire project lifecycle within the same environment and project, while other platforms will require a different type of approach, which could be string-based or file-based. While it may seem like a formality, setting up the actual translation project has key checkpoints such as:

- Ensuring files were ingested in their totality

- Ensuring that language pairs are correct down to the locale level

- Ensuring that dates are correct

- Ensuring that workflow steps are the necessary ones
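
Two of the checkpoints above, complete ingestion and locale-level language pairs, lend themselves to an automated check. The following is a minimal sketch; `setup_problems` is a hypothetical helper, and the locale pattern is a deliberate simplification rather than a full language-tag validator.

```python
import re

# Language tag with a mandatory region, e.g. "pt-BR". Simplified on
# purpose; real language tags allow more variation than this.
LOCALE_WITH_REGION = re.compile(r"^[a-z]{2,3}-[A-Z]{2}$")


def setup_problems(exported_keys: set[str], ingested_keys: set[str],
                   target_locales: list[str]) -> list[str]:
    """List setup issues to resolve before translation starts."""
    problems = []
    missing = exported_keys - ingested_keys
    if missing:
        problems.append(f"not ingested: {sorted(missing)}")
    for locale in target_locales:
        if not LOCALE_WITH_REGION.match(locale):
            problems.append(f"locale lacks a region: {locale}")
    return problems


report = setup_problems(
    exported_keys={"menu.open", "menu.save"},
    ingested_keys={"menu.open"},
    target_locales=["pt-BR", "es"],
)
# report == ["not ingested: ['menu.save']", "locale lacks a region: es"]
```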

Assigning translators

In this step, we are assuming that you already have a previously vetted team of translators available for each language pair. It’s key to work with translators that are:

Assigning to reviewers

Same as with translators, with the added emphasis on critical discernment. You need reviewers who are critical enough to understand the difference between style and errors. Reviewers who change too much at this stage of the process compromise the integrity of the translation process as a whole. Here is where Bureau Works’ proprietary quality management framework comes in handy.

Query management

A key part of software translation is being able to address questions that arise during the translation process. For example, what does button “X” stand for, or what does this variable point to? It’s important to have a process that allows you to ask questions regarding the source language and have the answers available to all translators on the project regardless of their language pair in order to minimize having to answer the same question over and over. It’s also key to have a way to assign different statuses to the query such as new, assigned, solved, and so on so that your project management team can ensure queries are answered in a timely manner.
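
One way to picture such a process is a query log keyed by source string, so that an answer given once is visible to every language pair. This is only a sketch under that assumption; `Query`, `QueryLog`, and the key `btn.x` are hypothetical names, and the statuses mirror the ones mentioned above.

```python
from dataclasses import dataclass
from enum import Enum


class QueryStatus(Enum):
    NEW = "new"
    ASSIGNED = "assigned"
    SOLVED = "solved"


@dataclass
class Query:
    string_key: str                  # the source string being asked about
    question: str
    status: QueryStatus = QueryStatus.NEW
    answer: str = ""


class QueryLog:
    """Queries are keyed by source string, not by language pair."""

    def __init__(self) -> None:
        self._by_key: dict[str, Query] = {}

    def ask(self, string_key: str, question: str) -> Query:
        # Reuse the existing query instead of opening a duplicate.
        return self._by_key.setdefault(string_key, Query(string_key, question))

    def solve(self, string_key: str, answer: str) -> None:
        query = self._by_key[string_key]
        query.answer = answer
        query.status = QueryStatus.SOLVED

    def open_queries(self) -> list[Query]:
        return [q for q in self._by_key.values()
                if q.status is not QueryStatus.SOLVED]


log = QueryLog()
from_french = log.ask("btn.x", "What does button X stand for?")
from_german = log.ask("btn.x", "Is X a close button?")  # same query returned
open_before = len(log.open_queries())
log.solve("btn.x", "It closes the dialog.")
open_after = len(log.open_queries())
```

The project management team can then poll `open_queries()` to see what still needs an answer.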

Automated Quality Assurance checks

Critical to any software localization process, automated QA checks ensure that certain key items are flagged such as:

- Trailing spaces

- Character limits

- Inconsistent punctuation

- Glossary non-adherence

- Spelling

- Inconsistent tags (mismatches in source and translated code)
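
Several of the checks above can be sketched in a few lines. The function below is a simplified illustration, not the rule set of any particular QA tool: `qa_issues` is a hypothetical name, the placeholder pattern is an assumption, the punctuation rule ignores language-specific conventions, and spelling is left out because it needs a dictionary.

```python
import re

# Assumed placeholder and tag syntax: {name} and simple XML-like tags.
PLACEHOLDER = re.compile(r"\{[A-Za-z_][A-Za-z0-9_]*\}|</?[A-Za-z][^>]*>")


def qa_issues(source: str, target: str, max_chars: int,
              glossary: dict[str, str]) -> list[str]:
    """Return the QA flags raised for one source/target pair."""
    issues = []
    if target != target.rstrip():
        issues.append("trailing space")
    if len(target) > max_chars:
        issues.append("over character limit")
    source_end = source.strip()[-1:]
    if (source_end and source_end in ".!?:"
            and not target.strip().endswith(source_end)):
        issues.append("inconsistent punctuation")
    if sorted(PLACEHOLDER.findall(source)) != sorted(PLACEHOLDER.findall(target)):
        issues.append("tag or placeholder mismatch")
    for term, required in glossary.items():
        if term.lower() in source.lower() and required.lower() not in target.lower():
            issues.append(f"glossary term not applied: {term}")
    return issues


flags = qa_issues(
    source="Save {count} files to your <b>workspace</b>.",
    target="Guardar {count} archivos en su <b>carpeta</b> ",
    max_chars=60,
    glossary={"workspace": "espacio de trabajo"},
)
# flags == ["trailing space", "inconsistent punctuation",
#           "glossary term not applied: workspace"]
```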

Re-importing translated strings

If you’ve gotten this far alive, you are doing something right! :) It can be less painful, but you are here. Now it’s time to re-import the strings into your repository and rebuild the software. If you’ve missed or mismanaged any of the best practices outlined previously, you may struggle at this step with issues in the re-import process due to breaks in code, tag mismatches, or other issues that could impact successful code reuptake.
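
A quick structural comparison before the import can catch some of these breaks early. The sketch below assumes flat JSON resource files that map string keys to text; `reimport_problems` is a hypothetical helper, not part of any specific toolchain.

```python
import json


def reimport_problems(source_json: str, translated_json: str) -> list[str]:
    """Compare a translated resource file with its source before import."""
    source = json.loads(source_json)
    try:
        translated = json.loads(translated_json)
    except json.JSONDecodeError as error:
        return [f"translated file is not valid JSON: {error.msg}"]
    problems = []
    for key in sorted(source.keys() - translated.keys()):
        problems.append(f"missing key: {key}")
    for key in sorted(translated.keys() - source.keys()):
        problems.append(f"unexpected key: {key}")
    return problems


SOURCE = '{"menu.open": "Open", "menu.save": "Save"}'

clean = reimport_problems(SOURCE, '{"menu.open": "Abrir", "menu.save": "Guardar"}')
incomplete = reimport_problems(SOURCE, '{"menu.open": "Abrir"}')
broken = reimport_problems(SOURCE, '{"menu.open": "Abrir",')
# clean == []
# incomplete == ["missing key: menu.save"]
```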

Automated QA processes can also integrate with tools like automated identity verification to enhance security and ensure data accuracy during localization efforts.

Source: LinkedIn

QA checks either in the staging environment or of screenshots

This step can be ignored if it’s merged with testing, but it’s helpful to provide translators with access to either a staging environment or screenshots of main screens to ensure that strings show properly and there are no glaring issues that prevent testing from starting.

Testing

This is a stage that drives quality beyond the obvious bug detection and fixes. Testing allows another fresh look at the translated strings in context. Some translations that seemed perfect during the translation process may not be the best from a user experience standpoint. It’s important to work with testers who are committed to improving the user experience and not just reactively looking for bugs.

Conclusion

Software localization is an extensive, expensive, and complex process. But it’s not just that. It’s iterative and continuous, which means you will go through the same process countless times in order to update your software as it continues to evolve. This is a high-level and superficial overview of a process that can become infinitely more nuanced and complex to handle. But the key takeaway is that it’s worth every penny to invest in a framework and best practices from the very beginning. Once the ship has sailed, it will become increasingly challenging and expensive to fix.

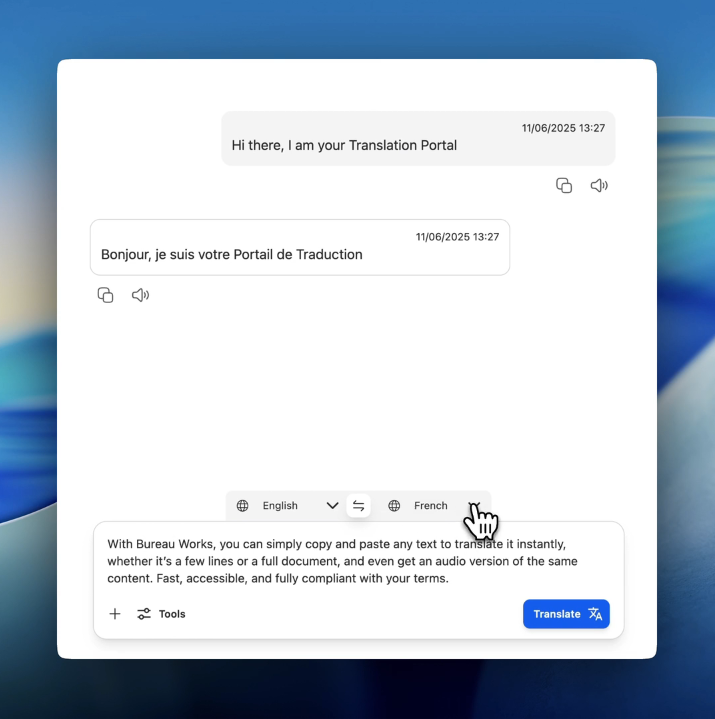
